June 6, 2025

Beyond Nvidia: The Hidden AI Infrastructure Companies on NASDAQ

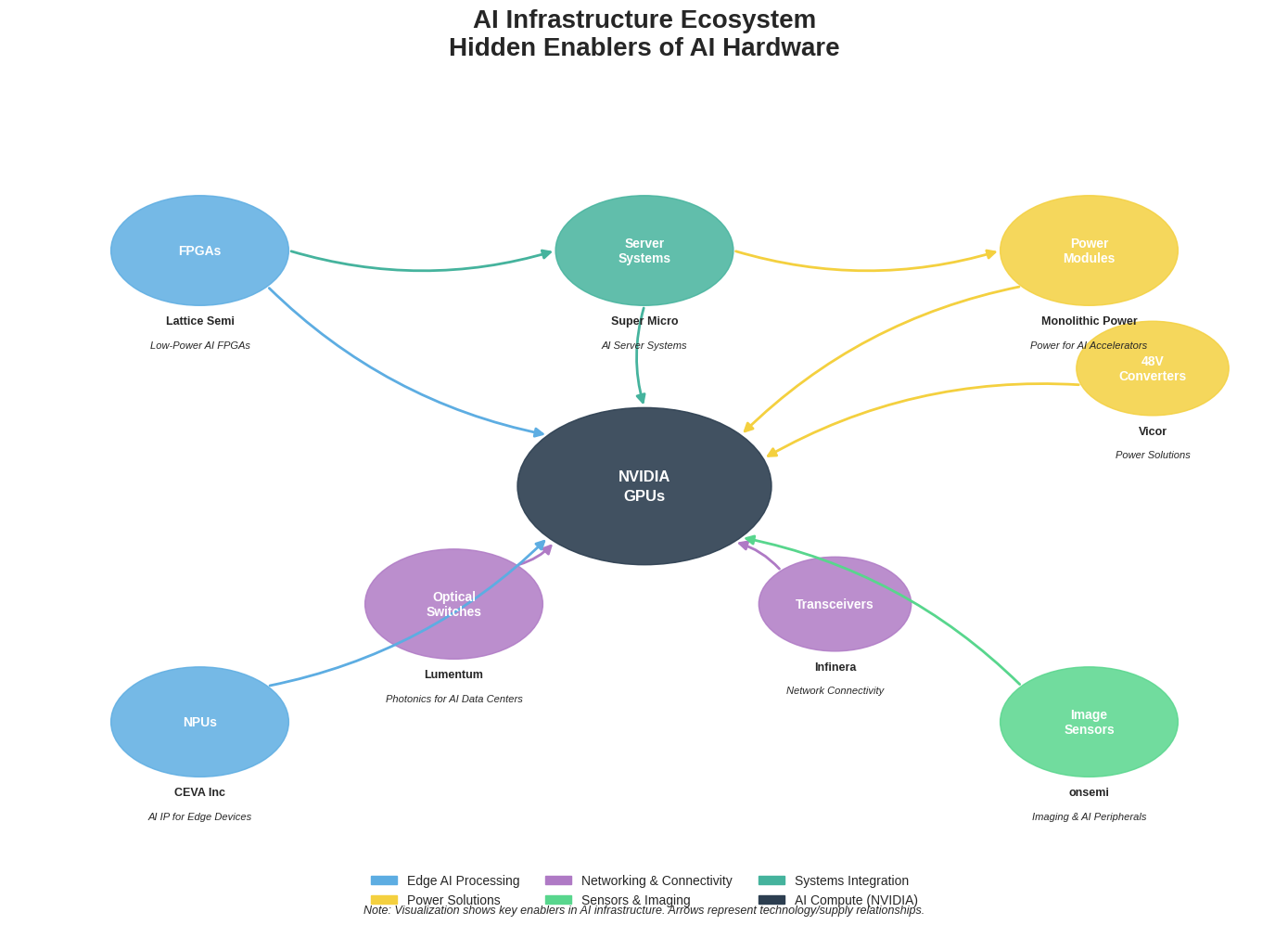

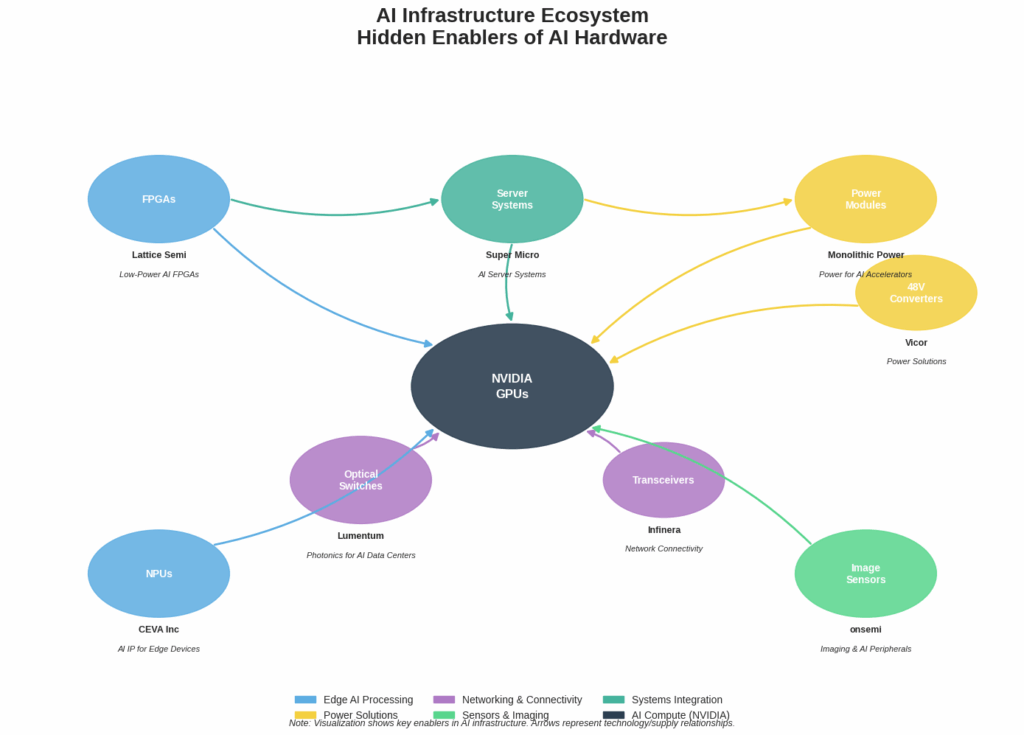

Investors hunting NASDAQ AI stocks will find that much of the AI supply chain relies on niche hardware suppliers and chip firms beyond the usual megacaps. These companies make the specialized components, from FPGAs and AI NPUs to power modules and optical switches, that enable modern AI data centers and edge devices. Below, we profile several under-the-radar NASDAQ-listed companies in semiconductors, systems, and networking. For each, we note its AI relevance, business niche, recent financial context, and stock-performance trends, as well as how it might fit into AI-themed NASDAQ ETFs.

Lattice Semiconductor (NASDAQ: LSCC) – Low-Power AI FPGAs

Lattice builds low-power FPGA chips for embedded and edge applications. Its FPGAs handle vision, sensor-fusion and AI inference tasks in space- or power-constrained systems. Lattice highlights its role in “advanced embedded vision, artificial intelligence, and sensor fusion” in industrial and IoT markets. For example, at recent trade events Lattice has demonstrated small FPGAs running power-efficient AI for robotics and automation. In short, Lattice’s programmable chips let device makers add AI capabilities (e.g., CNN accelerators) without power-hungry GPUs, carving out a niche as a “low power programmable leader”.

Financials & Stock: Lattice is a smaller-cap chipmaker, with a market cap in the single-digit billions. Its stock has been volatile: it peaked near $97 in August 2023 but fell to ~$46 by mid-2025, leaving its 3-year total return roughly flat and behind the broader semiconductor sector. Analysts track Lattice as an edge-AI play. Notably, the iShares Robotics and Artificial Intelligence Multisector ETF (IRBO) has listed LSCC among its top holdings, reflecting Lattice’s stature in robotic/AI hardware. (Note: IRBO is a NASDAQ-listed ETF with exposure to AI infrastructure plays.)

CEVA, Inc. (NASDAQ: CEVA) – AI IP for Edge Devices

CEVA licenses DSP and AI processor intellectual-property (IP) blocks that chip designers integrate into their SoCs. Its IP cores include neural processing units (NPUs) and sensor processors for low-power embedded AI; for example, CEVA’s NeuPro NPU family targets AI inference in cameras, wearables and smart sensors. A recent CEVA press release notes that the company has built an “embedded AI ecosystem” around its NeuPro-Nano NPUs, adding partnerships that provide pre-optimized neural-network models for functions like face recognition and speech spotting. In practice, CEVA’s IP can be integrated into chips for smartphones, security cameras, and other devices, bringing AI to the “Smart Edge” without full-fledged GPUs.

Financials & Stock: CEVA’s revenues are modest (tens of millions of dollars per quarter), but analysts have been generally optimistic: the average 12-month analyst price target is around $38 (as of May 2025), reflecting bullish sentiment on CEVA’s growth. The stock has gained roughly 40% over three years as investors price in demand for edge-AI chips, slightly trailing the S&P 500’s ~46% return over the same period. In sum, CEVA is an indirect AI play: it doesn’t make GPUs, but it supplies the AI brainpower inside many sensors and SoCs via IP licensing.
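Cumulative returns are easier to compare once they are annualized. The sketch below is a minimal illustration rather than a performance tool: it assumes the rounded three-year figures quoted above (~40% for CEVA, ~46% for the S&P 500) and ignores dividend timing and exact start/end dates. The helper name `annualized_return` is hypothetical.

```python
def annualized_return(total_return: float, years: float) -> float:
    """Convert a cumulative return (0.40 means +40%) into a compound annual rate."""
    if years <= 0:
        raise ValueError("years must be positive")
    if total_return <= -1.0:
        raise ValueError("total_return must be greater than -100%")
    # Solve (1 + r) ** years == 1 + total_return for r.
    return (1.0 + total_return) ** (1.0 / years) - 1.0


# Rounded three-year figures quoted in the article (assumed, not recomputed).
ceva_cagr = annualized_return(0.40, 3)
spx_cagr = annualized_return(0.46, 3)

print(f"CEVA:    {ceva_cagr:.1%} per year")
print(f"S&P 500: {spx_cagr:.1%} per year")
print(f"Gap:     {(ceva_cagr - spx_cagr) * 100:+.1f} percentage points per year")
```

With these inputs, a 40% three-year gain works out to roughly 11.9% a year and a 46% gain to roughly 13.4% a year, a gap of about 1.6 percentage points annually.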

Monolithic Power Systems (NASDAQ: MPWR) – Power for AI Accelerators

Monolithic Power Systems (MPS) designs high-efficiency power-management chips, notably 48V-to-12V DC-DC converters. These parts sit on AI accelerator boards, stepping the data center’s 48V supply down toward the low voltages GPUs need. Industry reports note that Monolithic’s 48V power modules are “used by many AI chip modules” in data centers. In other words, MPS quietly supplies power regulators for systems built on Nvidia or AMD GPUs: even as Nvidia drove the AI boom, MPS provided much of the board-level voltage-regulation hardware behind the scenes. Although a late-2024 rumor suggested MPS could lose share in Nvidia’s next-generation chips, analysts at Oppenheimer reiterated that the company should retain a “dominant AI accelerator power share” in 2025.
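The case for 48V distribution comes down to resistive losses: for a fixed power P, the current is I = P / V and the loss in the supply path is I²R, so raising the rail from 12V to 48V cuts current by 4x and conduction loss by about 16x. The sketch below illustrates that arithmetic under hypothetical assumptions (a 1 kW board and 1 milliohm of total path resistance) and deliberately ignores converter efficiency and every other real-world loss.

```python
def bus_current(power_w: float, voltage_v: float) -> float:
    """Current drawn from a supply rail: I = P / V."""
    return power_w / voltage_v


def conduction_loss(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the distribution path feeding the load."""
    current = bus_current(power_w, voltage_v)
    return current ** 2 * resistance_ohm


# Hypothetical values for illustration only: a 1 kW accelerator board fed
# through a distribution path with 1 milliohm of total resistance.
LOAD_W = 1_000.0
PATH_R = 0.001

for rail in (12.0, 48.0):
    amps = bus_current(LOAD_W, rail)
    loss = conduction_loss(LOAD_W, rail, PATH_R)
    print(f"{rail:>4.0f} V rail: {amps:6.1f} A, {loss:5.2f} W lost in the path")

ratio = conduction_loss(LOAD_W, 12.0, PATH_R) / conduction_loss(LOAD_W, 48.0, PATH_R)
print(f"The 12 V path loses about {ratio:.0f}x more power than the 48 V path")
```

Because loss scales with the square of current, the benefit grows quickly as accelerator power budgets rise, which is why efficient 48V conversion near the GPU is a valuable niche.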

Financials & Stock: MPS is a large-cap leader in high-end power ICs. The stock rallied dramatically through 2021–2024 (with the share price at one point near $900) but has pulled back from its August 2024 peak as concerns surfaced. It still trades at a high multiple (forward P/E ~50), reflecting its growth profile. Over 3 years, MPS shares have gained roughly 50–60%, outperforming the market. The consensus view is cautiously positive: analysts see ongoing demand for efficient power in AI servers and have recently reaffirmed “Outperform” calls. (A Nasdaq-featured Motley Fool piece likewise highlights MPS’s critical role supplying 48V chips in GPU modules.)

(Rival Vicor Corp. (NASDAQ: VICR) also makes 48V power modules for AI servers, but analysts rank Monolithic as the market-share leader. Vicor is worth watching – its high-efficiency converters likewise target next-gen AI racks.)

onsemi (NASDAQ: ON) – Image Sensors & AI Peripherals

onsemi (formerly ON Semiconductor) is best known for analog/sensor chips. Its “Hyperlux” image sensors are designed for demanding AI vision systems. For example, onsemi recently announced that its Hyperlux AR0823AT image sensor will be the primary “eyes” in Subaru’s next-generation EyeSight stereo-vision system. These sensors feed high-quality video into Subaru’s AI driving algorithm, enabling advanced driver-assist features. The press release emphasizes that onsemi’s sensors capture high-dynamic-range images and are “tailored to capture the critical visual data required” for automotive AI. In short, onsemi supplies the front-end cameras and sensors that real-world AI systems rely on (from cars to factory robots).

Financials & Stock: onsemi is a large-cap company (market cap in the tens of billions) diversified across auto, industrial, and cloud markets, and its AI/sensor business has recently drawn more attention. Its stock has risen roughly 20% over the past 3 years, buoyed by auto and power demand, versus about 45% for the S&P 500. Still, onsemi often flies under the radar in AI discussions. Investors can view onsemi as a “semiconductor stock for AI” in the sense that its chips feed AI systems, in contrast to pure-play AI-chip firms.

Lumentum (NASDAQ: LITE) – Photonics for AI Data Centers

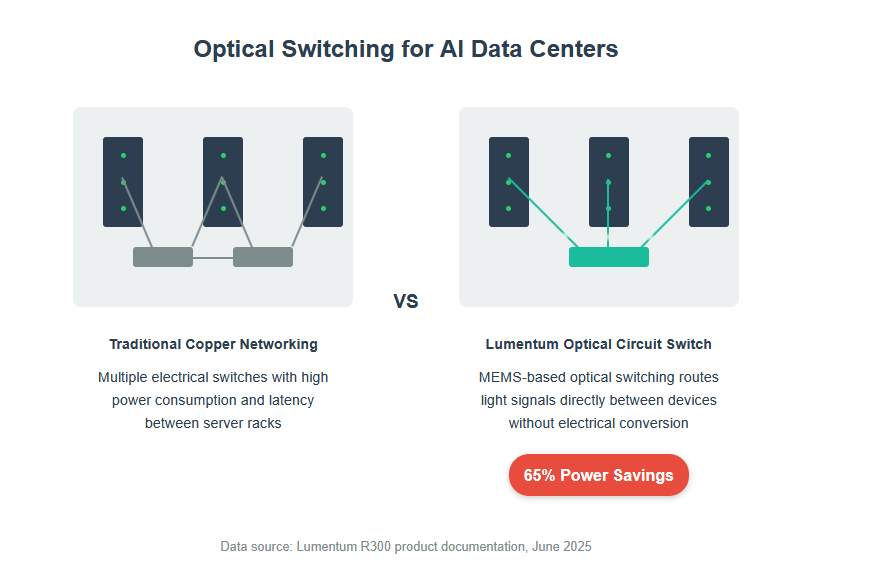

Lumentum makes optical and photonic components critical to cloud data-center networks. Its new R300 optical circuit switch (OCS) is explicitly targeted at AI clusters. According to a Feb 2025 press release, the R300 “is a breakthrough solution designed to enhance the scalability, performance, and efficiency of artificial intelligence (AI) clusters and intra-data-center networks”. This MEMS-based switch can dynamically route light between hundreds of devices without first converting it into electrical signals, as conventional electrical packet switches must. The company claims the OCS can reduce network power in large AI deployments by ~65% compared with traditional Ethernet/InfiniBand fabrics. (Citing a Cignal AI analyst, Lumentum notes that hyperscalers see optical switching as “critical” for scaling AI infrastructure.) In effect, Lumentum helps hyperscalers link GPUs with high-bandwidth optics, a distinct slice of the AI stack.

Financials & Stock: Lumentum is a mid-cap photonics company (~$4–5B market cap). Its stock had a big run in 2024 (+86% over one year) but is roughly flat over three years. LITE trades around $60 (June 2025) after correcting from its recent highs. The valuation is rich (forward P/E in the 50s), reflecting optimism about demand for high-speed optics. As AI servers proliferate, Lumentum looks well-positioned, and it is often cited in discussions of “AI infrastructure” solutions.

Super Micro Computer (NASDAQ: SMCI) – AI Server Systems

Supermicro builds the server hardware that actually runs AI models. It offers GPU-accelerated servers, chassis, and liquid-cooling modules for AI data centers; for example, Reuters reported that Supermicro is supplying liquid-cooled GPU server racks for Elon Musk’s xAI supercomputer. Supermicro’s close ties with chipmakers (notably Nvidia) and its broad portfolio of GPU-dense systems make it a hidden node in AI’s supply chain. Its densest machines pack 8–10 Nvidia GPUs (such as H100s) with high-bandwidth interconnects and specialized cooling.

Financials & Stock: SMCI’s annual revenue is well above $10B (roughly $15B in fiscal 2024), mostly from servers and storage for hyperscalers. The stock skyrocketed from 2022 into early 2024 on AI demand and has been volatile since: over the past three years it rose dramatically, peaked in March 2024, then pulled back, though it remains well above pre-2021 levels. Supermicro is often called an “AI hardware play”; if the data-center AI boom continues, its systems will likely stay in high demand. (It’s not cheap: forward P/E ~60.) Investors see SMCI as one of the few pure-plays on AI server gear outside Nvidia/AMD.

AI ETFs on NASDAQ: What’s Inside?

Many NASDAQ-listed thematic ETFs bundle these niche names. For example, the iShares Robotics and Artificial Intelligence Multisector ETF (IRBO on Nasdaq) has included Lattice Semiconductor as a top-5 holding. The First Trust Nasdaq Artificial Intelligence and Robotics ETF (ROBT) and the Global X Robotics & Artificial Intelligence ETF (BOTZ) similarly invest in mid-cap AI infrastructure companies. In general, AI-focused ETFs (BOTZ, ROBO, IRBO, etc.) often hold smaller chip, sensor and hardware stocks alongside the big names, giving investors diversified exposure to the “AI supply chain” beyond Nvidia. Holdings change over time, however: as of 2019, IRBO’s top holdings included Cloudera, Snap, Lattice, Stratasys and iQIYI (CLDR, SNAP, LSCC, SSYS, IQ). These ETFs can be a way to play many of the above firms indirectly through a single Nasdaq-listed fund.
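Holding several overlapping ETFs creates indirect exposure to the same underlying stocks. One common way to estimate it is look-through analysis: multiply the dollars in each fund by that fund’s weight in a given stock, then sum across funds. The sketch below assumes two hypothetical funds (`FUND_A`, `FUND_B`) with made-up weights; real weights should come from each fund’s published holdings, which change over time.

```python
from collections import defaultdict


def look_through_exposure(positions, holdings):
    """Estimate dollar exposure to each underlying stock across several ETFs.

    positions: {etf_ticker: dollars invested in that ETF}
    holdings:  {etf_ticker: {stock_ticker: portfolio weight as a fraction}}
    """
    exposure = defaultdict(float)
    for etf, dollars in positions.items():
        # Funds missing from `holdings` contribute nothing.
        for stock, weight in holdings.get(etf, {}).items():
            exposure[stock] += dollars * weight
    return dict(exposure)


# Hypothetical funds and weights for illustration only.
holdings = {
    "FUND_A": {"LSCC": 0.015, "MPWR": 0.010, "NVDA": 0.080},
    "FUND_B": {"LSCC": 0.005, "ON": 0.020, "NVDA": 0.090},
}
positions = {"FUND_A": 10_000.0, "FUND_B": 5_000.0}

for stock, dollars in sorted(look_through_exposure(positions, holdings).items()):
    print(f"{stock:>5}: ${dollars:,.2f}")
```

With these made-up numbers, $15,000 split across both funds yields about $175 of look-through exposure to LSCC, which shows how small a stake a broad thematic ETF may deliver in any single niche name.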

Key Takeaways: Investors seeking NASDAQ semiconductor stocks for AI should look beyond the usual giants. Lattice (LSCC) and CEVA (CEVA) supply edge-AI compute, through low-power FPGAs and licensed NPU IP respectively. Monolithic Power (MPWR) and Vicor (VICR) supply the 48V power modules under AI GPUs. onsemi (ON) provides the image sensors feeding AI vision systems. Lumentum (LITE) furnishes optical switches and transceivers that interconnect GPUs; Infinera (INFN), another optical-networking name, was acquired by Nokia in early 2025 and no longer trades independently. Server vendors like Supermicro (SMCI) deploy these components at scale. And AI ETFs on Nasdaq (e.g., Global X BOTZ, iShares IRBO, First Trust ROBT) often include these names, giving broad exposure to AI infrastructure. Each of these companies plays a distinct role in the AI hardware ecosystem, and their NASDAQ-listed stocks offer a way for investors to go “beyond Nvidia” into the hidden infrastructure powering the AI revolution.

Sources: company filings, press releases and interviews; news reports; and analyst and ETF reports.